Selected Projects

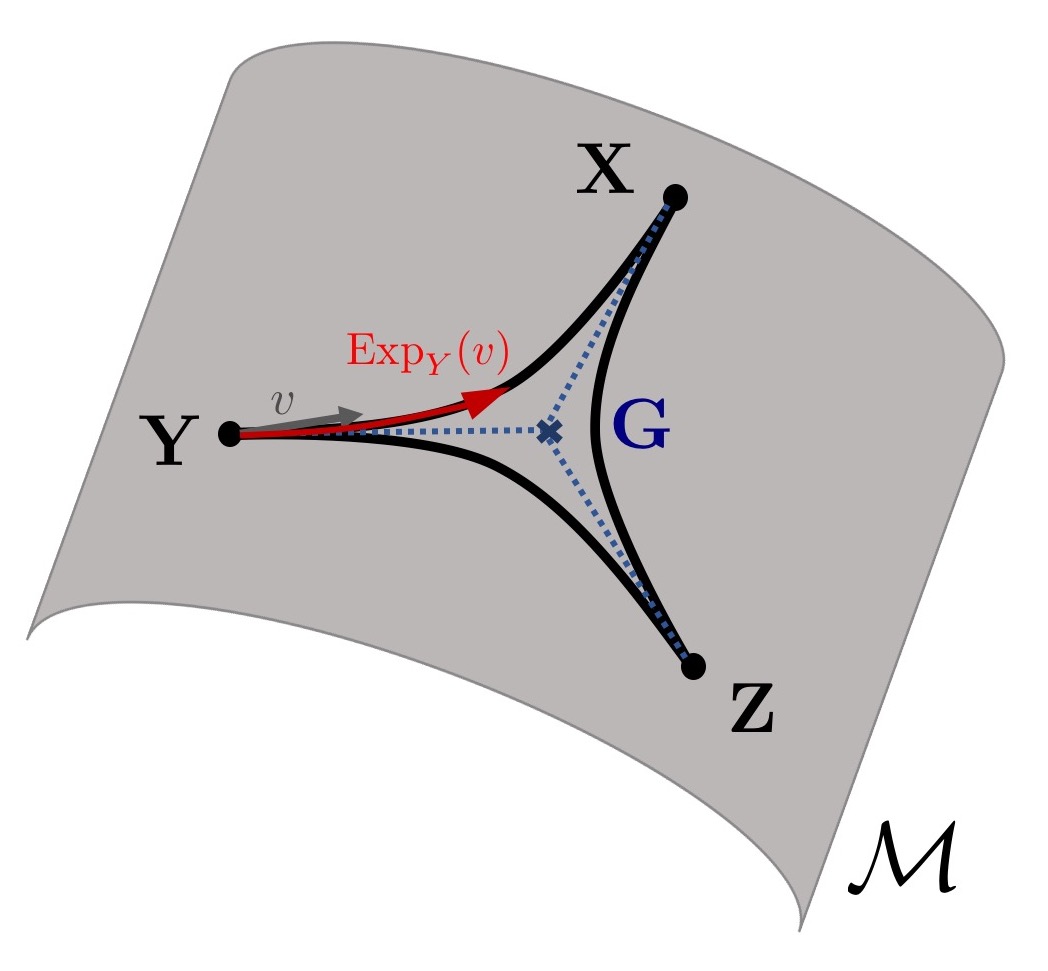

Constrained Optimization on Manifolds

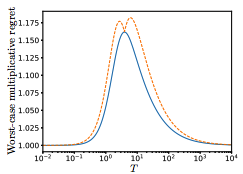

Riemannian Frank-Wolfe methods for nonconvex and geodesically convex (g-convex) optimization.

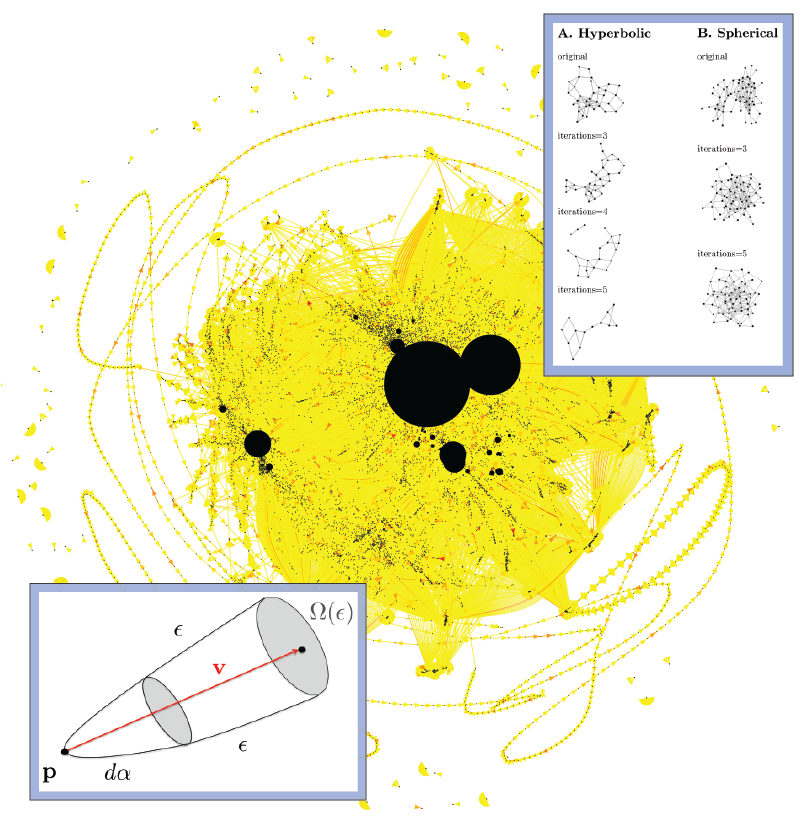

Discrete Geometry and Machine Learning on Graphs

Discrete Ricci curvature as a tool for analyzing the structure of complex networks.

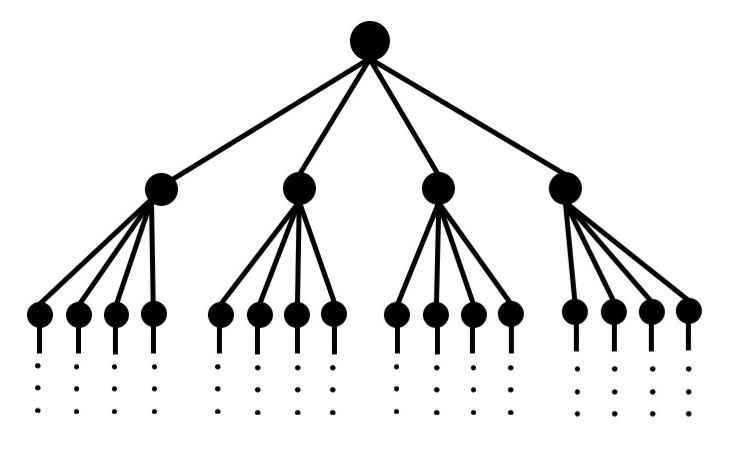

Machine Learning in Non-Euclidean Spaces

Harnessing the geometric structure of data in machine learning.